Video basics

To understand how video is stored and displayed we have to go back in time and look at a very outdated technology: the cathode ray tube (CRT) television. Without giving a physics course: a TV tube is a big piece of glass with no air inside. Inside there is a cathode which emits electrons when it is heated up (that's why it takes a while for the picture to show when you turn on your TV: the cathode first has to reach the appropriate temperature to emit electrons). There is also a strong electromagnetic field which accelerates the electrons towards the front of the tube, and the same electromagnetic field is used to position the electron beam (there are a lot of electrons being fired towards the front of the tube). The front of the tube is phosphor-coated, and when electrons hit it light is emitted on the other side (that's the side you're sitting on). Below you can see a schematic of a CRT.

At first TVs were only black and white, so one electron beam was enough. Now, in order to display a picture you have to write it all over the screen, so the electron beam has to sweep over the whole screen. The sweeping frequency is commonly known as the refresh rate. The refresh rate was chosen according to the frequency of the local mains electricity: North America and part of Japan use 60 Hz; Europe, the Middle East and parts of Asia use 50 Hz. This resulted in two competing TV systems:

NTSC: National Television System Committee. Also nicknamed "Never the same color" because no two NTSC pictures look the same. The NTSC system has 525 horizontal lines, of which roughly 487 can be seen on screen, and a refresh rate of 60 Hz interlaced (I'll get to that later on).

PAL: Phase Alternating Line. The PAL system has 625 horizontal lines, of which roughly 540 can be seen on screen, and a refresh rate of 50 Hz interlaced.

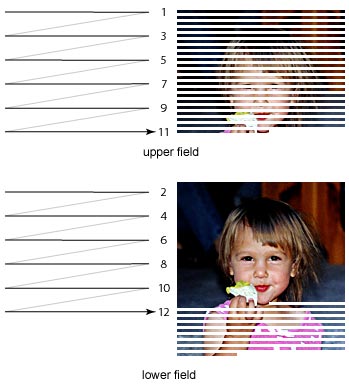

Now, at the time when TVs first came to the market, the technology to write 525 or 625 lines 60 or 50 times per second, respectively, was prohibitively expensive and not suited for the mass market. Reducing the refresh rate would have required more complicated circuits and wasn't an option either; besides, there is a lower limit to what the human mind accepts as fluent motion. But the TV engineers had an idea: what if we only wrote every second line of the picture during a sweep, and wrote the other half during the next sweep? That way we only need 25 or 30 full pictures per second (meaning less bandwidth used, meaning more TV stations in the same frequency band), and the human eye will still accept it as fluent motion. This idea of splitting up the image into two parts became known as interlacing, and the split-up pictures as fields. Graphically speaking, a field is basically a picture with every 2nd line black (or white, whatever you like better). But here's an image so that you can better imagine what's going on:

During the first sweep the upper field is written on screen. As you can see, the 1st, 3rd, 5th, etc. lines are written, and after writing each line the electron beam moves back to the left before writing the next line.

As you can see on the left, the picture currently exhibits a "combing" effect: it looks like you're watching it through a comb. When people refer to interlacing artifacts or say that their picture is interlaced, this is what they commonly mean.

Once all the odd lines have been written, the electron beam travels back to the upper left of the screen and starts writing the even lines. As it takes a while before the phosphor stops emitting light, and as the human brain is too slow, instead of seeing two fields what we see is a combination of both fields - in other words the original picture.

When TVs finally got color the interlacing technology stayed the same, but a more sophisticated cathode ray tube was required. Instead of emitting just one electron beam, it emits 3 electron beams, one each for the colors red, green and blue. When you place dots of different colors close enough together the human eye will no longer see individual dots but one single dot, and will add the colors to create a new color. Below you can see the schema of a color CRT.

TVs use an additive color system to display all kinds of colors. For more information on additive color mixing please refer to the RGB World Color Info article.

In the NTSC world the switch to color required another change: the refresh rate had to be slightly lowered from 60 Hz to 59.94 Hz (resulting in 29.97 pictures per second) to accommodate the colors - that's why we have these strange framerates in the NTSC world today.
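
The rounded figures are commonly written as exact fractions: the black-and-white rates divided by 1.001. The short check below is only an illustration of that arithmetic; the variable names are made up for this example.

```python
from fractions import Fraction

# Color NTSC rates are commonly quoted as the old rates divided by 1.001.
field_rate = Fraction(60000, 1001)   # fields (sweeps) per second
frame_rate = field_rate / 2          # two fields make one full picture

print(f"{float(field_rate):.2f} fields per second")   # 59.94
print(f"{float(frame_rate):.2f} frames per second")   # 29.97
```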

Now, before we proceed to look at how they shoot Hollywood movies, let's have a look at PC monitors. Traditional PC CRT displays are fundamentally different from TV screens. When PCs first came to the market it was finally possible to write a whole picture per sweep, also known as progressive scanning (scanning because the electron beam "scans" each line from left to right). Early PC monitors still supported interlaced modes, but the higher contrast and bright backgrounds gave us such a headache that these days, fortunately, most screens don't even support an interlaced mode of operation anymore. Today all PC screens write a picture like this:

Recently there have been TV screens which support a progressive scanning mode. These models are very rare, though, and have to be fed a different signal, as the traditional ways of connecting your VCRs, DVD players or cameras to the TV do not support progressive images. LCD and plasma displays can only write progressive images; when you feed them an interlaced image, it takes some technical tricks to display a reasonable picture. These techniques are commonly referred to as deinterlacing.

A last word about TVs before we proceed: as you might recall, older TVs had tubes that were far from flat. The farther away you are from the center of the tube (the point where the electron beam would go straight to the phosphor layer without any deflection), the more complicated it gets to write a geometrically accurate and precise image, so even today you won't see the full tube; the last few inches are hidden behind the TV casing. That's the reason why both TV formats have more lines than you can see: the rest of the lines are and will always stay hidden. But these lines are still used: TV channels transmit text pages in these lines, and they can contain signals that confuse the automatic gain control of your VCR (the Macrovision analogue copy protection system), etc.

Before we can go into deinterlacing there are a few things you should know about how movies are shot.

Most movies destined for a movie theater audience are shot on a material similar to what we use for traditional photography. Every second, 24 pictures of a scene are taken. So, theoretically you can shoot a movie with your photo camera, except that you have to switch films every 1 or 1.5 seconds (and photo cameras usually don't support taking 24 photos per second ;). When we watch these movies in the movie theater we get to see 24 pictures (also known as frames) per second. But when we buy these movies on VHS tapes or DVDs to watch them on our crappy TV screens we have a problem. PAL screens require 25 pictures per second, and each picture has to be split into 2 fields. But as 25 isn't much higher than 24, what we commonly do in PAL countries is take the original 24 fps (frames per second) movie and speed it up to 25 fps. This means that voices and music have a higher pitch and that the movie is somewhat shorter, but unless you do a one-to-one comparison hardly anybody notices.
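
To put numbers on the PAL speedup, here is a small back-of-the-envelope calculation. It assumes the audio is simply played faster together with the picture, without any pitch correction; the function and variable names are invented for this sketch.

```python
import math

FILM_FPS = 24
PAL_FPS = 25

# Playback speed factor when 24 fps material is shown at 25 fps.
speedup = PAL_FPS / FILM_FPS

# Assumption: audio is sped up along with the picture, so pitch rises too.
pitch_shift_semitones = 12 * math.log2(speedup)

def pal_runtime_minutes(film_minutes: float) -> float:
    """Running time of a film after the 24 -> 25 fps speedup."""
    return film_minutes / speedup

print(f"speedup: {(speedup - 1) * 100:.2f} %")                           # 4.17 %
print(f"pitch shift: {pitch_shift_semitones:.2f} semitones")            # 0.71
print(f"a 120 minute film runs {pal_runtime_minutes(120):.1f} minutes")  # 115.2
```

So a two hour movie ends almost five minutes early, and everything sounds a bit less than one semitone higher.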

Now enter NTSC. Here we need 29.97fps. Speeding up the movie is no option as the speed difference would be too large for people not to notice. So what's being done is that after splitting up frames into fields certain fields are being repeated to obtain the higher framerate. Basically 4 frames are turned into 10 fields as shown below:

So, as you can see from the image, contrary to what you might think a higher framerate doesn't mean more fluent motion - quite to the contrary, NTSC is a bit more jerky as some fields are being displayed twice (the first field of frame 2, and the 2nd field of frame 4).
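
The 4 frames to 10 fields pattern can be written down as a few lines of Python. This is only a sketch: the frame names A to D, the "top"/"bottom" labels and the `telecine` function are made up for illustration, and it assumes the frames alternately contribute 2 and 3 fields, which matches the repeated fields named above.

```python
def telecine(frames):
    """Turn film frames into fields, 4 frames -> 10 fields.

    Each field is a (frame_name, parity) tuple, parity being "top" or "bottom".
    """
    fields = []
    parity = "top"
    for index, frame in enumerate(frames):
        # Frames alternately contribute 2 and 3 fields.
        count = 2 if index % 2 == 0 else 3
        for _ in range(count):
            fields.append((frame, parity))
            # Parity keeps alternating across the whole field stream.
            parity = "bottom" if parity == "top" else "top"
    return fields

fields = telecine(["A", "B", "C", "D"])
for number, (frame, parity) in enumerate(fields, start=1):
    print(f"field {number:2d}: frame {frame}, {parity}")
```

In the output the top field of frame B and the bottom field of frame D each show up twice, and fields 5 and 6 come from two different frames.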

On the TV this isn't so much of a problem as crappy quality prevents us from noticing that something isn't quite right. But things change when we switch into the progressive world.

In order to display things progressively your display or playback device somehow has to turn the interlaced picture back into a progressive one. The easiest way to do that is to combine fields.

From the 10 fields you put the first two fields together to reconstruct frame 1, then the 3rd and 4th field to reconstruct frame 2. But if you then put the 5th and 6th field together you get neither frame 2 nor frame 3. This isn't so bad if nothing changed from frame 2 to 3, but if the camera moves you'll be able to see some combing lines in the picture. And it can get even worse. Imagine there's a cut between the two frames and frame 3 shows a completely different scene than frame 2. If you combine a field of one scene with a field of another scene, what you have is a disaster. So, by simply combining fields back into frames, not only would 2 out of every 5 frames very likely be messed up, we would also have a 29.97fps picture instead of the original framerate of the movie.

Now, if we know how this process works we can undo it by simply discarding the duplicate fields. This process is called IVTC - InVerse TeleCine (and the process of inserting duplicate fields is accordingly called telecine). There are two good articles which explain telecine and IVTC in more detail: Video and Audio synching problems by Robshot, which explains the creation of telecined content, and Force Film, IVTC, and Deinterlacing - what is DVD2AVI trying to tell you and what can you do about it by hakko504, manono and jiggimi. There's also my own guide on Decomb, probably the most popular IVTC utility.
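
Both the naive field combining and its reversal can be tried out on a toy field list. The sketch below cheats in one important way: every field carries the name of the frame it came from, so duplicates are trivial to spot. A real IVTC tool has to find them by comparing the picture content. All names here (`FIELDS`, `weave_pairs`, `inverse_telecine`) are hypothetical.

```python
# Ten telecined fields from four film frames A-D, as (frame, parity) pairs.
FIELDS = [
    ("A", "top"), ("A", "bottom"),
    ("B", "top"), ("B", "bottom"),
    ("B", "top"), ("C", "bottom"),
    ("C", "top"), ("D", "bottom"),
    ("D", "top"), ("D", "bottom"),
]

def weave_pairs(fields):
    """Naively combine each pair of consecutive fields into one frame."""
    return [(fields[i][0], fields[i + 1][0]) for i in range(0, len(fields), 2)]

def inverse_telecine(fields):
    """Discard duplicate fields and regroup the rest by source frame."""
    frames = {}
    for name, parity in fields:
        # A repeated field adds nothing new to the set.
        frames.setdefault(name, set()).add(parity)
    # Keep frames for which both fields were found, in original order.
    return [name for name, parities in frames.items()
            if parities == {"top", "bottom"}]

woven = weave_pairs(FIELDS)
mixed = [pair for pair in woven if pair[0] != pair[1]]
print(woven)                     # 5 frames, two of them mix different sources
print(len(mixed), "of", len(woven), "frames are mixed")
print(inverse_telecine(FIELDS))  # the four original frames
```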

Now that we have IVTC out of the way let's have a more detailed look at deinterlacing. First let me present the problem once again:

First we have two fields from an interlaced video scene:

As you can see - no interlacing artifacts visible. Now the corresponding frame:

Despite the low quality JPEG you can see that there are some interlacing lines visible, especially on the guy's clothes and arms.

And here's the even worse example where we have one field from one scene and the 2nd field from another scene:

And the corresponding frame:

As you can see that's not something we want to experience. What's also interesting is the size of these images. The one on the left is more than 3 times as large as the rest, and it still looks worse.

This also explains why storing interlaced pictures in progressive mode isn't a good idea. The combing lines are fine, high-contrast detail, and that kind of detail takes up a lot of space when compressed.

VCD and common MPEG-4 codecs (except XviD) support only progressive content. Thus storing something interlaced as shown on the left using such a compression technique isn't very efficient, and we would rather look for ways of turning interlaced material into progressive material that work better than just combining the next 2 fields into a frame.

MPEG-2 and MPEG-4 advanced simple profile do have a special interlaced mode. In that mode all the lines from one field are taken together (leaving out the blanks) and compressed that way which saves a lot of bits that would otherwise be wasted to store the missing lines.

A last note on these screenshots: As this was taken from an interlaced DVD source and stored in interlaced mode the screenshots of the fields had to be stretched to their original size (remember that in interlaced mode we only encode the actual lines, and dump the blank ones) - in reality the fields would be half the vertical size of the frames.

Now that we have visually established our problem, let's have a look at the possible solutions. As illustrated, taking the first two fields and combining them into a frame isn't always possible. This is especially true when you're dealing with content that was edited when it was already in interlaced mode (that's also problem number one when trying to IVTC; Anime content in particular is often cut after telecining the film parts, which results in almost unsolvable IVTC problems).

One simple and quick way to get rid of the interlacing problem would be to take the field based content, resize the fields to full frame size (remember that a field has half the vertical resolution of a frame) and then dump every second field. This method is for instance used when you select Separate Fields in GordianKnot. But as a field has only half the vertical resolution of a frame we give up half of the vertical resolution in the process.
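
The method just described can be sketched on a toy frame made of labeled rows. Resizing is done here by plain line doubling, which is an assumption made to keep the example short; real resizers interpolate, and nothing here is meant to show how GordianKnot works internally.

```python
def separate_fields(frame):
    """Split a frame (a list of rows) into its two fields."""
    return frame[0::2], frame[1::2]

def line_double(field):
    """Crude resize to full frame height: repeat every row once."""
    return [row for row in field for _ in range(2)]

frame = ["row0", "row1", "row2", "row3", "row4", "row5"]
first, second = separate_fields(frame)

# Keep the first field at full height; the second field is thrown away.
kept = line_double(first)
print(kept)   # ['row0', 'row0', 'row2', 'row2', 'row4', 'row4']
```

The result has the full height again, but only three of the six original rows survive, which is the loss of vertical resolution mentioned above.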

Now let's have a look at different deinterlacing techniques:

Weave: Takes 2 consecutive fields and puts them into one frame. This halves the framerate but doesn't solve the problems shown above; the frame which has fields of two scenes overlaid remains the same.

Blend: Here we take two consecutive fields, resize them to frame size, then put them on top of each other. If we have no motion this looks perfect, but as soon as there's movement it starts to look unnatural and unsharp and it can leave a "ghostly trail".

Bob: In bob you enlarge each field to frame size and display it twice. As the first and second field do not start at the exact same position (remember that we start at line 1 for field 1 and at line 2 for field 2) the picture slightly bobs up and down, which can be seen as a slight shimmering in stationary scenes.
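
The three techniques can be compared on a tiny example where each field is a list of rows and each row a list of brightness values. This is a minimal sketch with made-up function names; resizing is again done by simple line doubling, and the one line vertical offset between the two fields (the cause of the bobbing) is ignored.

```python
def line_double(field):
    """Crude resize to full frame height: repeat every row once."""
    return [row for row in field for _ in range(2)]

def weave(top, bottom):
    """Interleave the rows of two fields into one frame."""
    frame = []
    for top_row, bottom_row in zip(top, bottom):
        frame.extend([top_row, bottom_row])
    return frame

def blend(top, bottom):
    """Resize both fields to frame height and average them pixel by pixel."""
    a, b = line_double(top), line_double(bottom)
    return [[(p + q) / 2 for p, q in zip(row_a, row_b)]
            for row_a, row_b in zip(a, b)]

def bob(top, bottom):
    """Resize each field to frame height; each becomes a frame of its own."""
    return [line_double(top), line_double(bottom)]

# Something changed between the two fields: dark first, bright second.
top = [[0, 0], [0, 0]]
bottom = [[100, 100], [100, 100]]

print(weave(top, bottom))   # rows alternate dark/bright: combing
print(blend(top, bottom))   # everything is 50.0: a ghostly mix
print(bob(top, bottom))     # two separate frames, one dark, one bright
```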

There are a few more methods, like area based deinterlacing, motion blur and adaptive deinterlacing. Each has its pros and cons. 100fps.com has a nice comparison of all methods, including good samples that show you the effects each filter has, as well as a feature comparison matrix. The site also guides you through creating true 50fps progressive material from interlaced sources. If the site is too much to read for you (I doubt any Doom9 reader could ever say that but be that as it may), Gunnar Thalin's area based deinterlacer and DeinterlacePALInterpolation, which is based on Thalin's filter, are pretty good solutions when you need 25fps output. Then there's also Decomb's field deinterlace, which proves to be quite effective.

Before you deinterlace, though, try swapping the field order. DVD2AVI has a function for it (Video - Field Operations - Swap Field order) and so does AviSynth (SwapFields). Quite often that can solve your interlacing issues, especially when the main movie appears to be interlaced.
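
To see why a swap can be enough, consider a progressive picture whose two fields ended up in each other's place. The plain Python sketch below only illustrates the idea of exchanging the two fields of a frame; it is not the implementation of either tool, and the `swap_fields` function is invented for this example.

```python
def swap_fields(frame):
    """Exchange the two fields of a frame by swapping adjacent rows."""
    swapped = list(frame)
    for i in range(0, len(swapped) - 1, 2):
        swapped[i], swapped[i + 1] = swapped[i + 1], swapped[i]
    return swapped

progressive = ["row0", "row1", "row2", "row3"]
wrong_order = ["row1", "row0", "row3", "row2"]   # fields woven the wrong way round

print(swap_fields(wrong_order))   # ['row0', 'row1', 'row2', 'row3']
```

No picture information is lost in this case, which is why it is worth trying before any real deinterlacing.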

Parting words: This is by no means a complete technical description and it was written trying to recall all the classes in physics and video compression I have taken in high school and college. I hope my memory hasn't failed me too miserably.

This document was last updated on November 24, 2003